Reprinted here with permission of Steve Freegard of Fort Systems Ltd. (Original article)

Greylisting… Revisited

by Steve Freegard

Our BarricadeMX products have supported greylisting since they were first released. From the beginning, our greylisting used custom modifications to make it more acceptable to businesses and to address some of the original method’s shortcomings.

As we have started work on what will become the third version of the BarricadeMX SMTP engine, I began by looking back at support issues from the past few years to see what we could do better and to decide what should and should not be present in the new version.

As part of that review process I started looking again at greylisting; should we keep it or should it go? I had found a number of tickets where we had advised customers to whitelist servers because they did not handle greylisting at all. But was this reason enough to get rid of it completely? Is it still effective?

For the purpose of this paper, it is assumed that the reader has an understanding of the Simple Mail Transfer Protocol (SMTP), the Domain Name System (DNS), and related terminology.

Background

Greylisting has been around since 2003, when Evan Harris wrote the original whitepaper on it as a spam filtering mechanism.

The basic premise is that, during the SMTP connection, a tuple of connecting IP address, sender, and recipient is recorded in a database and the recipient is deferred (with a temporary failure) for a fixed period of time. If the same tuple comes back after that period has passed, the recipient is accepted and the message is allowed through. The idea is that a sending system that implements a retry queue will pass greylisting after a while, whereas spam engines will not, because they work in a fire-and-forget fashion and do not implement retry queues.
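The premise above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not the original whitepaper's implementation: the class name `Greylist`, the injectable clock, and the 300-second delay in the usage note are all assumptions made for the example.

```python
import time


class Greylist:
    """Toy in-memory greylist keyed on (ip, sender, recipient)."""

    def __init__(self, delay_seconds, now=time.time):
        self.delay = delay_seconds
        self.now = now          # injectable clock, handy for testing
        self.first_seen = {}    # tuple -> timestamp of the first attempt

    def check(self, ip, sender, recipient):
        """Return an SMTP-style reply code: 451 to defer, 250 to accept."""
        key = (ip, sender, recipient)
        t = self.now()
        # Record the first attempt; later attempts reuse the stored time.
        first = self.first_seen.setdefault(key, t)
        if t - first < self.delay:
            return 451          # temporary failure: try again later
        return 250              # same tuple came back after the delay
```

A sender with a retry queue sees 451 on its first attempts and 250 once the delay has elapsed, for example with `Greylist(300)`. A fire-and-forget sender never comes back, so its message is never accepted.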

The issue with this approach was that using the proposed tuple of IP, sender, and recipient delayed mail excessively until the greylisting system had ‘learnt’ who the regular correspondents were. Worse still, SMTP servers have wildly varying retry intervals: some retry after a few seconds, while others take hours, so genuine mail can be delayed for very long periods.

The other issue was with SMTP implementations that use a shared spool for the retry-queue. One host might send the initial message while another host on a different IP sends the retry; the greylist tuple then fails to match the retry, again causing severe delays.

Many implementations were created with various workarounds for these shortcomings.

Many people have spoken out against greylisting and have speculated that like all anti-spam mechanisms it will become less effective over time, because the spammers will adapt to it. So in 2011, eight years after the original whitepaper was published, has this happened?

Testing

Because traditional greylisting sends a temporary failure to each recipient, quantifying how many messages this actually equates to is impossible. So for the purposes of this test I will be sending the temporary failure at the end of DATA so that I may count unique messages and track the retries of each message. This comes with a big caveat – bitter experience with SMTP implementations tells me that greylisting a message at the end-of-data phase instead of each recipient can and probably will cause different results in the real world, because some SMTP implementations handle retries differently depending where the deferral is done and what deferral code was used.

As noted in the ‘background’ section above, using the IP address as part of the tuple causes problems with shared-spools. Many greylisting implementations therefore only use the first 3 octets of the IPv4 address to treat a single /24 as one entry. The problem with this approach is that some shared spools might use more than one /24 or there might be multiple unrelated hosts in that same /24; either way this could also cause some undesired results. To overcome the issues with shared spools I will use a different approach:

The full IPv4 or IPv6 address will be used if:

- The connecting host has no PTR record, a.k.a. reverse DNS (rDNS).
- The rDNS record contains the first two or last two octets of the IP address.
- The rDNS record contains the ‘short’, decimal, or hex representation of the full IP address.
- Multiple rDNS records are returned.
- The rDNS record cannot be verified by forward confirmation (i.e. FCrDNS).
- The top-level-domain (TLD) used is not valid.

Otherwise the first label (the host part) of the rDNS name is stripped, but no further than the domain boundary. For example:

| rDNS | Greylist key used |
| --- | --- |
| host.sub.domain.com | sub.domain.com |
| host.domain.com | .domain.com |
| domain.com | domain.com |

For the remainder of this paper I will refer to this hybrid IP / rDNS key as the ‘hostid’.
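The rules above could be sketched roughly as follows. This is not the BarricadeMX code: the function names, the tiny `VALID_TLDS` set, and the "last two labels" domain boundary are assumptions for illustration (a real system would use the IANA TLD list and the Public Suffix List). DNS lookups are left to the caller, who passes in the PTR names and the FCrDNS result. The IP-in-name check handles IPv4 only and skips the ‘short’ representation, which the text does not define. The leading-dot handling is inferred solely from the three rows of the example table.

```python
import ipaddress
import re

# Hypothetical, deliberately tiny TLD list for the sketch.
VALID_TLDS = {"com", "net", "org", "uk"}


def registered_domain(name):
    """Naive domain boundary: the last two labels (an assumption)."""
    return ".".join(name.split(".")[-2:])


def ip_embedded(ip, name):
    """True if the rDNS name looks as if it was generated from the IPv4 address."""
    if ip.version != 4:
        return False                      # IPv6 not covered by this sketch
    octets = str(ip).split(".")
    numbers = re.findall(r"\d+", name)
    pairs = list(zip(numbers, numbers[1:]))
    # First two or last two octets appearing as adjacent numbers in the name.
    if tuple(octets[:2]) in pairs or tuple(octets[2:]) in pairs:
        return True
    # Decimal or hex form of the whole address.
    as_int = int(ip)
    return str(as_int) in name or format(as_int, "08x") in name


def hostid(ip_text, ptr_names, fcrdns_ok):
    """Return the greylist key: the full IP, or a key derived from the rDNS name."""
    ip = ipaddress.ip_address(ip_text)
    if len(ptr_names) != 1 or not fcrdns_ok:
        return str(ip)                    # no PTR, multiple PTRs, or FCrDNS failed
    name = ptr_names[0].rstrip(".").lower()
    labels = name.split(".")
    if len(labels) < 2 or labels[-1] not in VALID_TLDS or ip_embedded(ip, name):
        return str(ip)
    domain = registered_domain(name)
    if name == domain:
        return name                       # nothing to strip
    rest = ".".join(labels[1:])           # drop the host label
    return "." + rest if rest == domain else rest
```

With these assumptions, `hostid("192.0.2.10", ["host.domain.com"], True)` gives `.domain.com`, while a generated name such as `2-10.dsl.domain.com` falls back to the full IP address.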

This method presumes that shared spool hosts will always have valid rDNS and that the pool of hosts will also either share a common sub-domain or domain. Experience of using this method in our BarricadeMX products has proven this to be the case.

Here is an example of this from the results. It shows a single message being received and greylisted and, for each retry, the time in seconds since the previous attempt and the IP address of the host sending the message. In this case the hostid is ‘.obsmtp.com’ (Outblaze):

| Retry | Time diff. (s) | IP address |
| --- | --- | --- |
| 1 | 0 | 64.18.3.98 |
| 2 | 68 | 64.18.3.98 |
| 3 | 67 | 64.18.3.86 |
| 4 | 65 | 64.18.3.88 |
| 5 | 65 | 64.18.3.88 |
| 6 | 66 | 64.18.3.36 |
| 7 | 68 | 64.18.3.36 |
| 8 | 65 | 64.18.3.22 |
| 9 | 65 | 64.18.3.26 |
| 10 | 66 | 64.18.3.90 |
| 11 | 66 | 64.18.3.100 |
| 12 | 66 | 64.18.3.32 |
| 13 | 67 | 64.18.3.24 |
| 14 | 65 | 64.18.3.86 |
| TOTAL | 859 | |

As can be seen, multiple retries from a pool of hosts sharing a spool are easily handled by one greylist ‘hostid’ with a total greylist delay for this message of 859 seconds. My only comment regarding this is that the retry interval used here is rather short with no back-off.

The greylist tuple I am going to use for the purposes of this paper and so that I can track unique messages and their retries will be:

| Field | Description |
| --- | --- |
| hostid | As described above |
| sender | The envelope sender as sent in MAIL FROM: |
| recipients | All envelope recipients as sent in RCPT TO: |
| message ID | The Message ID header if present. If missing the ‘md5’ field will be used and ‘@my.hostname.tld’ suffixed to the end. |
| md5 | The md5 of the message body. |
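As a rough illustration of how such a tuple might be assembled from the envelope and the raw message, here is a short sketch. The function name `greylist_tuple` is hypothetical, as is the decision to sort the recipients so that their order does not affect the key. The body is taken to be everything after the first blank line, which is a simplification.

```python
import hashlib
from email import message_from_bytes


def greylist_tuple(hostid, sender, recipients, raw_message,
                   hostname="my.hostname.tld"):
    """Build (hostid, sender, recipients, message ID, md5) for one message."""
    # Split headers from body at the first blank line.
    headers, _, body = raw_message.partition(b"\r\n\r\n")
    md5 = hashlib.md5(body).hexdigest()
    # Header lookup is case-insensitive; None if the header is absent.
    message_id = message_from_bytes(headers + b"\r\n\r\n").get("Message-ID")
    if message_id is None:
        # Fallback described in the table: md5 plus '@' and the local hostname.
        message_id = f"{md5}@{hostname}"
    # Sorting the recipients is an assumption of this sketch.
    return (hostid, sender, tuple(sorted(recipients)), message_id.strip(), md5)
```

Because the message ID and body hash are part of the key, two different messages from the same sender to the same recipients are tracked separately, which is what allows unique messages and their retries to be counted.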

The other deviation from the original paper is to prevent excessive delays for each hostid. All that greylisting proves is that a host (or group of hosts) correctly implements a retry-queue. Once that has been proven then that ‘hostid’ is exempted from further greylisting for 40 days since it was last seen. This is done to reduce the impact and delays that greylisting can cause. Although I have not done so in this test I also recommend that hosts listed on the public DNS whitelists such as the DNS Whitelist (DNSWL) be exempted from greylisting for the same reason.

To prevent spammers from exploiting this exemption by continuously cycling through their sender, recipient, and template lists in an effort to defeat greylisting and/or be exempted, a ‘hostid’ must retry either the first or the last message that has been seen from it (this handles both last-in-first-out and first-in-first-out queue strategies). Until then, any other messages will be greylisted.
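The exemption and the first-or-last rule used in this test might be modelled along these lines. It is a sketch under assumptions: `HostRecord` and the helper names are invented for the example, and message tuples are represented as opaque keys. The 40-day window is renewed each time the hostid is seen.

```python
EXEMPT_SECONDS = 40 * 24 * 3600   # 40 days since the hostid was last seen


class HostRecord:
    """Per-hostid state (hypothetical structure for this sketch)."""

    def __init__(self):
        self.first_key = None     # first message tuple seen from this hostid
        self.last_key = None      # most recent new message tuple seen
        self.exempt_until = 0.0   # time until which greylisting is skipped


def note_new_message(record, key):
    """Remember the first and the latest message seen from the hostid."""
    if record.first_key is None:
        record.first_key = key
    record.last_key = key


def retry_may_pass(record, key):
    """Only a retry of the first (FIFO) or last (LIFO) message can pass."""
    return key in (record.first_key, record.last_key)


def mark_passed(record, now):
    """The hostid has proven it runs a retry queue: start the exemption."""
    record.exempt_until = now + EXEMPT_SECONDS


def is_exempt(record, now):
    """True while exempt; each sighting renews the 40-day window."""
    if now < record.exempt_until:
        record.exempt_until = now + EXEMPT_SECONDS
        return True
    return False
```

A spammer cycling through many different messages keeps changing `last_key`, so only a genuine retry of the very first message, or of whichever message is currently the latest, can earn the exemption.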

I also had to choose what timeout periods I would use:

850 seconds for the greylist deferral period. This was chosen because a Wikipedia article on greylisting lists the default retry times for various MTAs, with the average first retry at around the 15-minute mark; 850 seconds prevents a host that retries every minute from passing before this.

I also believe that 15 minutes is a good margin for a DNS blacklist (DNSBL) to see the message from an IP (e.g. infected, malicious, hacked or other), list it, sync the list with any mirrors and allow for a negative cache TTL to expire. The theory is that if a host is greylisted and not already listed on a DNSBL, it could be blacklisted before mail is accepted from it. This theory may be the subject of a future article.

25 hours before greylist deferral records are removed. This was chosen in case someone had set their queue retry timer to 24 hours, unlikely, but you never know. I just wanted to pick an arbitrarily big number. I can revisit this choice based on the results in my conclusions.
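The two timeouts can be expressed as a small decision function. The sketch below is illustrative only: the function name, the return labels, and the choice of inclusive comparisons at the boundaries are assumptions. It replays the retry intervals from the ‘.obsmtp.com’ example table to show which attempt would first clear the 850-second deferral.

```python
from itertools import accumulate

DEFER_SECONDS = 850            # greylist deferral period
EXPIRE_SECONDS = 25 * 3600     # deferral records are removed after 25 hours


def decide(first_seen, now):
    """Classify a delivery attempt by the age of its greylist record."""
    age = now - first_seen
    if age >= EXPIRE_SECONDS:
        return "expired"       # record gone; the attempt starts a new one
    if age >= DEFER_SECONDS:
        return "accept"
    return "defer"


# Seconds between successive attempts, taken from the example table.
gaps = [0, 68, 67, 65, 65, 66, 68, 65, 65, 66, 66, 66, 67, 65]
ages = list(accumulate(gaps))  # elapsed time at each attempt
outcomes = [decide(0, age) for age in ages]
```

Under these assumptions the first thirteen attempts are deferred and the fourteenth, at 859 seconds, is accepted, which matches the total delay reported for that message.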

Based on the 25 hours before any greylist records are removed, I decided that the test would run for a total of 50 hours. For the first 25 hours, any message would be allowed through as normal, subject to the rules of greylisting. After that, I would only allow through messages from hosts that had outstanding greylist records, to give them the chance to pass the test; no other messages would be allowed or tracked, so that the test could finish gracefully.

I should also mention the mail stream that I am running this test on: it’s an old 3-letter domain that was first registered in 1989, but has been out of use for the past 10 years or so and has no real users present on it. I spent a lot of time generating addresses that were harvested and sold on a few years ago, so it gets quite a lot of botnet spew, mainsleaze and the occasional bit of misdirected mail.

Results

| | Messages | % |
| --- | --- | --- |
| Rejected | 71,638 | 98.5 |
| Lost | 34 | 0.04 |
| Accepted | 1,041 | 1.43 |
| TOTAL | 72,713 | |

Lost is the count of the number of messages that were not retried after the hostid that sent them had passed the greylisting test.

These results were quite a surprise to me. Prior to starting this test I expected the numbers to be considerably lower. With this in mind I decided to analyse the results further to verify the outcome.

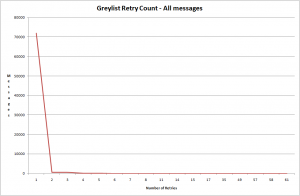

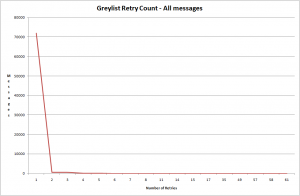

I started by analysing the number of retries seen for each message for all messages that had been seen during the test. The graph speaks for itself:

It shows that nearly all messages that failed greylisting made only a single delivery attempt.

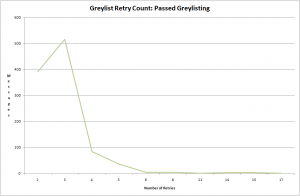

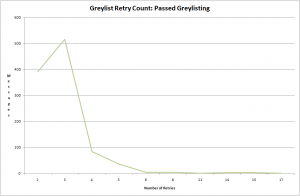

For comparison – here is the same graph, but showing only messages that passed greylisting:

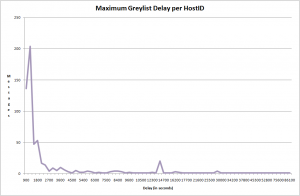

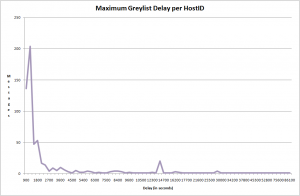

And here is a graph that shows the maximum delay caused by greylisting:

This shows the vast majority of messages were initially delayed for less than an hour.

Extremes

The graphs above show some big numbers at the extremes. I decided to investigate these.

SpamAssassin Mass Checks

I ran a SpamAssassin mass-check for each message class that I had collected from the test. This produced some interesting results, but it should be noted that the mass-checks were run after the test was complete. That means that some of the messages checked could have been over 50 hours old and would most likely have scored lower at the time the message was received.

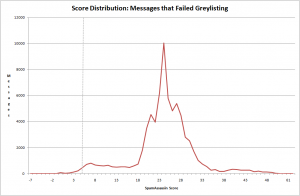

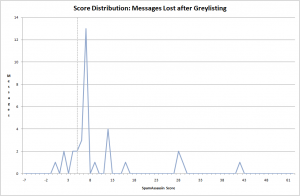

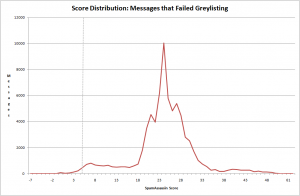

I started with all the messages that had failed to greylist properly:

This shows 0.6% of the messages were underneath the SpamAssassin threshold of 5. I manually inspected these messages and found only two that I would consider a false-positive, the rest were all false-negatives.

Both of the false-positives were a newsletter from the New York Post (Subject: Daily Newsletter). Upon inspection, delivery of both of these messages was attempted only once. The Received header shows that Postfix was the MTA used to deliver the message, so it must have been configured to do this, as this is not the default behaviour. The messages were sent from IP 209.73.248.15, which is listed on the DNSWL.

The mass-check results also show a high overlap with DNSBL / URIBL services:

| Rule | % |
| --- | --- |
| RCVD_IN_BRBL_LASTEXT | 98.160 |
| RCVD_IN_XBL | 95.977 |
| URIBL_DBL_SPAM | 90.352 |
| URIBL_JP_SURBL | 89.991 |
| URIBL_WS_SURBL | 89.873 |
| URIBL_AB_SURBL | 84.937 |
| RCVD_IN_PSBL | 76.349 |
| RCVD_IN_RP_RNBL | 69.914 |
| URIBL_SBL | 68.271 |
| RCVD_IN_PBL | 68.152 |
| URIBL_SC_SURBL | 55.040 |
| RCVD_IN_BL_SPAMCOP_NET | 52.021 |
| RCVD_IN_SORBS_DUL | 22.286 |
| URIBL_PH_SURBL | 12.720 |

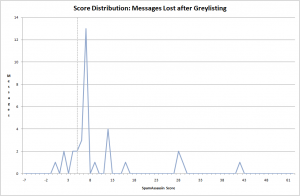

Here is the score distribution of messages that were not retried after the host sending the messages had passed the greylisting test with another message. As there were only 34 of these I manually looked at all of them and found them all to be spam.

I would speculate that some of these messages were not retried as the hosts were found to be compromised and the messages were subsequently deleted from the queues. On previous trial runs of this test I found evidence that Google did this when it shut down compromised accounts.

Again I found a significant overlap with DNSBL / URIBL services in the mass-check results:

| Rule | % |
| --- | --- |
| RCVD_IN_BRBL_LASTEXT | 87.879 |
| URIBL_DBL_SPAM | 81.818 |
| URIBL_RHS_DOB | 39.394 |
| RCVD_IN_RP_RNBL | 39.394 |
| URIBL_JP_SURBL | 36.364 |
| URIBL_AB_SURBL | 21.212 |
| RCVD_IN_PSBL | 15.152 |
| URIBL_OB_SURBL | 12.121 |
| RCVD_IN_XBL | 12.121 |
| URIBL_WS_SURBL | 12.121 |
| RCVD_IN_BL_SPAMCOP_NET | 9.091 |
| URIBL_SBL | 9.091 |

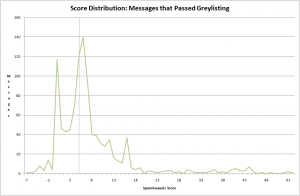

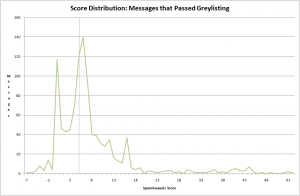

And finally here is the score distribution of the messages that passed greylisting:

As the mail stream I’m testing this on predominantly receives spam, it was not much of a surprise that a high proportion of these messages were considered to be spam. Here is the overlap with the DNSBL / URIBL services from the mass-check results:

| Rule | % |
| --- | --- |
| RCVD_IN_BRBL_LASTEXT | 64.808 |
| URIBL_DBL_SPAM | 52.692 |
| URIBL_RHS_DOB | 25.673 |
| RCVD_IN_PSBL | 25.192 |
| URIBL_WS_SURBL | 20.000 |
| URIBL_JP_SURBL | 14.712 |
| RCVD_IN_RP_RNBL | 12.500 |
| URIBL_OB_SURBL | 11.827 |
| URIBL_AB_SURBL | 7.115 |
| URIBL_SBL | 6.346 |
| RCVD_IN_BL_SPAMCOP_NET | 5.577 |
| RCVD_IN_XBL | 5.385 |
| RCVD_IN_SBL | 4.712 |

Overheads

The database that I used for this test recorded a single greylisting record for each greylist tuple which corresponds to a unique message, and a separate table tracked the retries for that tuple. This allowed me to track the bandwidth overhead associated with greylisting at the end-of-data in this way.

If all messages greylisted during this test were allowed through without being greylisted, the total size of the message data accepted would have been 151 MB excluding any protocol overheads. With greylisting this rose to 210 MB which is a 39% increase. However greylisting prevented 130 MB of traffic from being content scanned, an intensive activity, which is an 89% decrease in volume.
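The bandwidth increase quoted above follows directly from the two totals, as this short check shows. The variable names are illustrative. Only the increase is reproduced here, because the baseline behind the 89% reduction in scanned volume is not stated in the text.

```python
baseline_mb = 151           # message data if nothing had been greylisted
with_greylisting_mb = 210   # message data actually received, retries included

# Relative increase in received message data caused by the retries.
increase = (with_greylisting_mb - baseline_mb) / baseline_mb
increase_percent = round(increase * 100)
```

The extra 59 MB is the cost of receiving retried messages in full at the end-of-data stage.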

Queue strategies

In the ‘Testing’ section above, I mentioned the requirement that a hostid should not be able to pass greylisting until it had retried the first or last message (e.g. LIFO or FIFO) that it had sent to prevent spammers from passing the greylisting via a ‘brute force’ retry method. This would also allow an optimisation to reduce the amount of bandwidth used by moving the greylisting deferral back to the RCPT TO: or DATA stage if the sender and recipients do not match either the first or last greylist tuple for that hostid.

However, while doing initial testing and removing any bugs from my greylist plug-in, I observed that several messages from free-mail hosts were ‘lost’ and never retried after the hostid had passed the test. Upon investigation, these messages were all spam, and I concluded that they were never retried because the abuse was detected: the accounts that sent them were either disabled or deleted, and any messages in the queues for these accounts were de-queued.

If one of these messages deleted from the queue had been either the first or the last message seen by the greylisting plug-in, then all mail from that hostid would have been delayed considerably as the hostid would not be able to pass the test for over 25 hours (in the case of a FIFO queue strategy). I also realised that I commonly ran Sendmail queues on overloaded systems with ‘sendmail -qp -OMinQueueAge=15m -OQueueSortOrder=random’ which would also fall foul of this requirement.

The data collected for this paper showed that only 6 messages were rejected because of this requirement that would otherwise have been accepted. All of the messages were considered to be spam by me and by SpamAssassin.

Based upon these observations I would not recommend making the queue strategy a requirement to pass greylisting at this time.

Conclusion

The numbers, I think, speak for themselves: even after eight years, greylisting is still effective. With modifications to the greylist tuple such as the hostid, exempting hosts once they pass greylisting, and skipping greylisting for hosts on DNS whitelists, it can be effective without adversely delaying genuine mail.

It can also significantly reduce the number of external network look-ups required and defer the CPU cost of content filtering until a host has passed greylisting, allowing greater scalability at the expense of extra bandwidth.

Critics of greylisting as an anti-spam technique would say that it can cause valid mail to be lost. I would counter that any e-mail server or web application that cannot correctly handle temporary failures as required by the RFC is going to be terminally unreliable anyway.

Greylisting might not be for everyone, and it will still require some hosts to be manually exempted. Based on these results, however, it will definitely be in the next version of BarricadeMX, and our customers can choose for themselves whether to enable it.

Thanks

The original idea for greylisting using part of the PTR record was made by Anthony Howe. My thanks to Stephen Swaney, Randolph Langley and Anthony Howe for editing and contributing changes to this paper.

Copyright 2011 by Fort Systems Ltd. All rights reserved.

May not be reprinted without permission.